Friend Recommendation

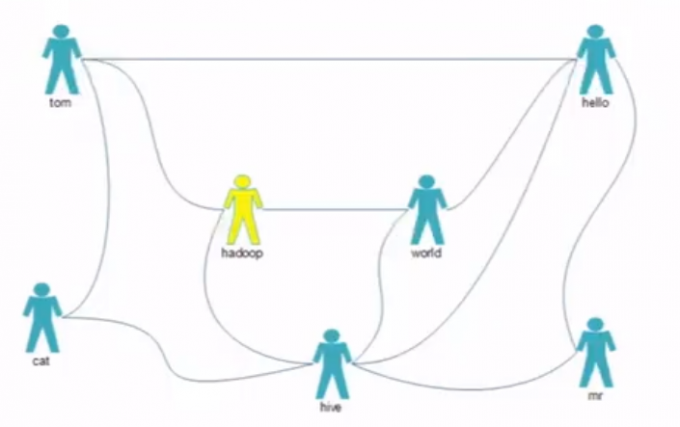

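Relationship graph

Data processing

Collecting the data

For each person, list their direct friends on one line: the person first, then each of their friends.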

```
tom cat hello hadoop
```
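These lines can be produced mechanically from the relationship graph. Below is a minimal plain-Java sketch, assuming friendship is mutual and the graph is available as a list of name pairs. The class name `BuildFriendLists` and the edge list are hypothetical and not part of the original project.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.TreeSet;

// Hypothetical preprocessing: build one "person friend1 friend2 ..." line per person
// from an undirected edge list (each String[] holds the two names of one friendship).
class BuildFriendLists {

    static List<String> toLines(List<String[]> edges) {
        // TreeMap/TreeSet keep people and their friends in a stable, sorted order
        Map<String, TreeSet<String>> friends = new TreeMap<>();
        for (String[] e : edges) {
            // friendship is assumed mutual, so record it in both directions
            friends.computeIfAbsent(e[0], k -> new TreeSet<>()).add(e[1]);
            friends.computeIfAbsent(e[1], k -> new TreeSet<>()).add(e[0]);
        }
        List<String> lines = new ArrayList<>();
        for (Map.Entry<String, TreeSet<String>> p : friends.entrySet()) {
            lines.add(p.getKey() + " " + String.join(" ", p.getValue()));
        }
        return lines;
    }

    public static void main(String[] args) {
        List<String[]> edges = List.of(
                new String[] {"tom", "cat"},
                new String[] {"tom", "hello"},
                new String[] {"tom", "hadoop"});
        toLines(edges).forEach(System.out::println);
    }
}
```

With only tom's three friendships this prints `cat tom`, `hadoop tom`, `hello tom` and `tom cat hadoop hello`. The order of friends within a line does not matter, because the map phase pairs up every friend with every other.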

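Processing the data

Map-phase output

Each record is a key-value pair stating whether two people are directly or indirectly related (0 = direct, 1 = indirect). For the input line above, the map phase emits: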

```
<'tom_cat': 0>, <'tom_hello': 0>, <'tom_hadoop': 0>, <'cat_hello': 1>, <'cat_hadoop': 1>, <'hello_hadoop': 1>
```

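The map function forms these two-person relationships. Pick one order for the two names in a key (ascending or descending) and apply it everywhere, so the same pair always produces the same key.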
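The map step can be sketched in plain Java, without the Hadoop classes, so the logic is easy to test on its own. In a real `Mapper` each pair would be written with `context.write(...)` rather than collected into a list. The class name is hypothetical. This sketch puts the alphabetically smaller name first, so its keys read `cat_tom` rather than the `tom_cat` shown above; either order works as long as every mapper applies the same rule.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Plain-Java sketch of the map phase (no Hadoop dependency).
class FriendMapLogic {

    // Normalize a pair so that (a, b) and (b, a) yield the same key:
    // the lexicographically smaller name always comes first.
    static String pairKey(String a, String b) {
        return a.compareTo(b) <= 0 ? a + "_" + b : b + "_" + a;
    }

    // One input line "person friend1 friend2 ..." becomes (key, flag) pairs:
    // 0 = person and friend are direct friends,
    // 1 = two of person's friends are linked indirectly through person.
    static List<Map.Entry<String, Integer>> map(String line) {
        String[] names = line.trim().split("\\s+");
        String person = names[0];
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (int i = 1; i < names.length; i++) {
            out.add(Map.entry(pairKey(person, names[i]), 0));
        }
        for (int i = 1; i < names.length; i++) {
            for (int j = i + 1; j < names.length; j++) {
                out.add(Map.entry(pairKey(names[i], names[j]), 1));
            }
        }
        return out;
    }

    public static void main(String[] args) {
        for (Map.Entry<String, Integer> kv : map("tom cat hello hadoop")) {
            System.out.println(kv.getKey() + "\t" + kv.getValue());
        }
    }
}
```

For `tom cat hello hadoop` this prints the three direct pairs `cat_tom`, `hello_tom` and `hadoop_tom` with 0, followed by the three indirect pairs `cat_hello`, `cat_hadoop` and `hadoop_hello` with 1.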

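Reduce-phase input

Keys that name the same two people in a different order count as the same relationship, so all values for one pair arrive together in a single group: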

```
<tom_cat:(0,1,0,0,0)>, <tom_hadoop:(1,1,1)>, <tom_hive:(1,1,1,1,1,1)>, <tom_world:(1,1,1,1,1,1)>
```

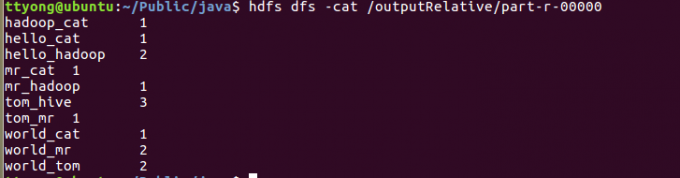
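Between the two phases, Hadoop's shuffle groups all values that share a key and hands each group to one `reduce` call. The small local stand-in below (hypothetical class name, plain Java) shows why the key normalization matters: only keys spelled identically end up in the same group, so an un-normalized `tom_cat` and `cat_tom` would be reduced separately.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Local stand-in for the shuffle: collect every flag emitted for a key into one list,
// with the keys kept in sorted order (TreeMap), similar to what a reducer receives.
class ShuffleSketch {

    static TreeMap<String, List<Integer>> group(List<Map.Entry<String, Integer>> mapOutput) {
        TreeMap<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> kv : mapOutput) {
            grouped.computeIfAbsent(kv.getKey(), k -> new ArrayList<>()).add(kv.getValue());
        }
        return grouped;
    }

    public static void main(String[] args) {
        // hypothetical, already-normalized map output collected from several input lines
        List<Map.Entry<String, Integer>> mapOutput = List.of(
                Map.entry("cat_tom", 0),
                Map.entry("cat_hadoop", 1),
                Map.entry("cat_tom", 0),
                Map.entry("cat_hadoop", 1));
        System.out.println(group(mapOutput)); // {cat_hadoop=[1, 1], cat_tom=[0, 0]}
    }
}
```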

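Reduce-phase output

For each pair that is related only indirectly (none of its values is 0), output the number of common friends, i.e. the number of 1s in its values: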

```
cat_hadoop 2
```
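The reduce decision is small enough to sketch as a plain function over one key's values. In a Hadoop `Reducer` the values would arrive as an `Iterable<IntWritable>` and the result would be written with `context.write(...)`; the class and method names here are hypothetical, and the count assumes the input contains no duplicate lines.

```java
import java.util.List;
import java.util.OptionalInt;

// Plain-Java sketch of the reduce phase for one normalized pair key.
class FriendReduceLogic {

    // Returns the number of common friends, or empty if the pair is already directly connected.
    static OptionalInt commonFriends(Iterable<Integer> flags) {
        int count = 0;
        for (int flag : flags) {
            if (flag == 0) {
                return OptionalInt.empty(); // direct friends: nothing to recommend
            }
            count++; // each 1 was emitted from the friend list of one shared friend
        }
        return OptionalInt.of(count);
    }

    // Print "key count" only for pairs that qualify as recommendations.
    static void emit(String key, List<Integer> flags) {
        commonFriends(flags).ifPresent(n -> System.out.println(key + " " + n));
    }

    public static void main(String[] args) {
        emit("tom_cat", List.of(0, 1, 0, 0, 0)); // skipped: contains a 0
        emit("tom_hadoop", List.of(1, 1, 1));    // prints "tom_hadoop 3"
    }
}
```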

Code

Mapper

```java
package sudo.edu.hadoop.mapreduce.recommendFriend;
```

Reducer

```java
package sudo.edu.hadoop.mapreduce.recommendFriend;
```

Main

```java
package sudo.edu.hadoop.mapreduce.recommendFriend;
```

Results

Post-processing the xx_xx results

This stage needs no reduce phase; it processes the xx_xx records produced by the previous stage.
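One way this map-only step could look, as a hypothetical plain-Java sketch: each `a_b n` line from the first job becomes two recommendation records, one suggesting `b` to `a` and one suggesting `a` to `b`, both carrying the common-friend count. It assumes names contain neither `_` nor whitespace. The first job's `TextOutputFormat` separates key and value with a tab by default, so the sketch splits on any whitespace.

```java
import java.util.List;

// Hypothetical map logic for the second job: no reducer, one input line in, two records out.
class SplitRecommendation {

    static List<String> map(String line) {
        String[] parts = line.trim().split("\\s+"); // e.g. "cat_hadoop\t2" -> ["cat_hadoop", "2"]
        String[] pair = parts[0].split("_");        // assumes names contain no '_'
        int common = Integer.parseInt(parts[1]);
        // person <tab> recommended friend <tab> number of common friends, in both directions
        return List.of(
                pair[0] + "\t" + pair[1] + "\t" + common,
                pair[1] + "\t" + pair[0] + "\t" + common);
    }

    public static void main(String[] args) {
        map("cat_hadoop\t2").forEach(System.out::println);
    }
}
```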

Mapper

```java
package sudo.edu.hadoop.mapreduce.recommendFriend2;
```

RecommentResInfo (a serializable, sortable class describing a person)

```java
package sudo.edu.hadoop.mapreduce.recommendFriend2;
```
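A hypothetical class in the spirit of `RecommentResInfo`, written against plain `java.io` so it runs without Hadoop. In a Hadoop job it would implement `org.apache.hadoop.io.WritableComparable`, which combines the `write(DataOutput)` and `readFields(DataInput)` methods of `Writable` with `compareTo`. The fields and the sort order (by person, then most common friends first) are assumptions. Note that Hadoop sorts keys only during the shuffle, so this ordering takes effect only when the job keeps a reduce phase (the default identity reducer is enough); with `job.setNumReduceTasks(0)` the map output is written unsorted.

```java
import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Objects;

// Hypothetical recommendation record: `candidate` is recommended to `person`,
// and the two share `common` friends.
class RecommendRecord implements Comparable<RecommendRecord> {
    String person;
    String candidate;
    int common;

    // Hadoop creates keys through reflection, so a no-arg constructor is required.
    RecommendRecord() { }

    RecommendRecord(String person, String candidate, int common) {
        this.person = person;
        this.candidate = candidate;
        this.common = common;
    }

    // Serialize the fields; readFields must read them back in the same order.
    public void write(DataOutput out) throws IOException {
        out.writeUTF(person);
        out.writeUTF(candidate);
        out.writeInt(common);
    }

    public void readFields(DataInput in) throws IOException {
        person = in.readUTF();
        candidate = in.readUTF();
        common = in.readInt();
    }

    // Group by person; within a person, more common friends first, then by candidate name.
    @Override
    public int compareTo(RecommendRecord o) {
        int c = person.compareTo(o.person);
        if (c == 0) c = Integer.compare(o.common, common); // descending count
        if (c == 0) c = candidate.compareTo(o.candidate);
        return c;
    }

    // Kept consistent with compareTo; Hadoop's default HashPartitioner relies on hashCode().
    @Override
    public boolean equals(Object other) {
        return other instanceof RecommendRecord && compareTo((RecommendRecord) other) == 0;
    }

    @Override
    public int hashCode() {
        return Objects.hash(person, candidate, common);
    }

    @Override
    public String toString() {
        return person + "\t" + candidate + "\t" + common;
    }

    public static void main(String[] args) {
        List<RecommendRecord> recs = new ArrayList<>(List.of(
                new RecommendRecord("hadoop", "cat", 2),
                new RecommendRecord("cat", "hive", 1),
                new RecommendRecord("cat", "hadoop", 2)));
        Collections.sort(recs);
        recs.forEach(System.out::println);
    }
}
```

After sorting, `cat`'s candidates come first with `hadoop` (2 common friends) ahead of `hive` (1), followed by `hadoop`'s record.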

Main

```java
package sudo.edu.hadoop.mapreduce.recommendFriend2;
```

Results
